
Enterprise-Grade Stream Governance

Discover, understand, and trust your data

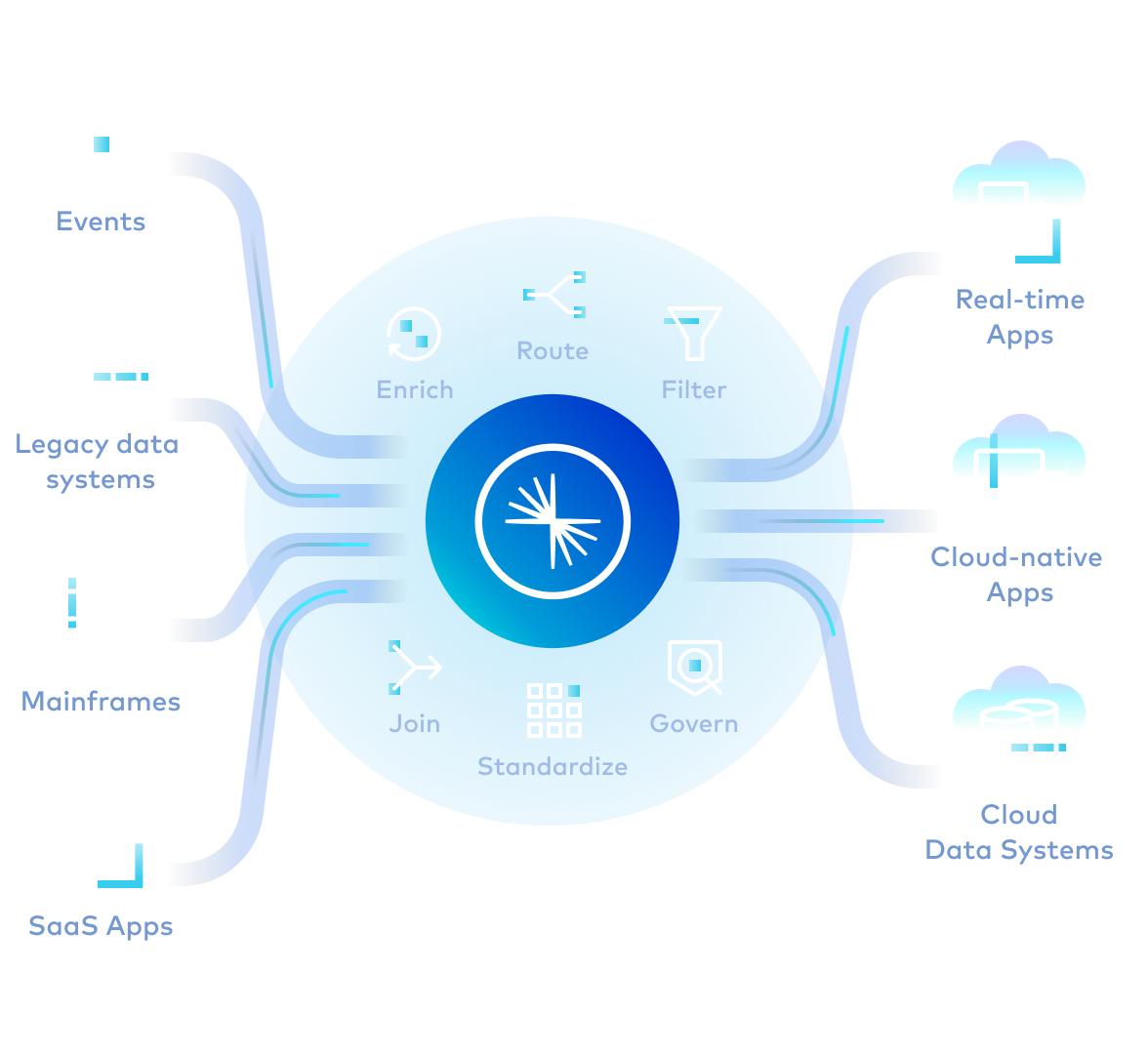

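Stream Governance gives you visibility into and control over the structure, quality, and flow of data across applications, analytics, and AI.

Stream Governance unifies data quality, discovery, and lineage. By defining a data contract once and enforcing it as data is created, you can build data products in real time rather than after batch processing.

Enforce data quality at the source, not after batch processing

Stream Quality prevents bad data from entering your data streams.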

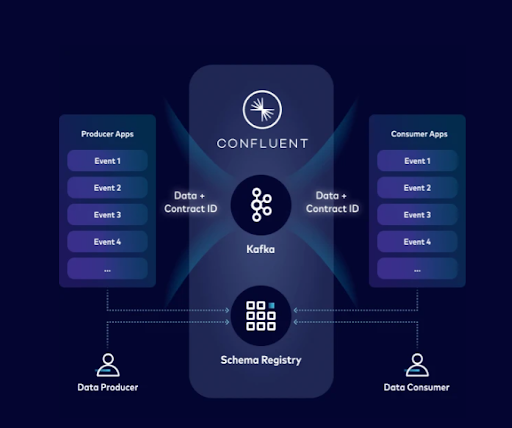

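Manage and enforce data contracts (schemas, metadata, and quality rules) between producers and consumers within your private network.

Schema Registry

Define and enforce universal standards for every data streaming topic in a versioned repository

Data Contracts

Apply semantic rules and business logic to your data streams

Schema Validation

Brokers verify that messages assigned to a specific topic use a valid schema

Schema Linking

Sync schemas in real time across cloud and hybrid environments

Client-Side Field Level Encryption (CSFLE)

Protect your most sensitive data by encrypting specific fields within messages at the client level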
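
To make the data contract idea above more concrete, here is a minimal Python sketch of a contract that pairs a schema (required fields and their types) with named quality rules, checked before a record is produced. This is an illustration only, not Confluent's implementation or API: the `DataContract` class, the `violations` method, and the `order_contract` example are all hypothetical names introduced here.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

# A rule takes a record and returns True when the record passes.
Rule = Callable[[Dict[str, Any]], bool]


@dataclass
class DataContract:
    """Hypothetical contract: required fields plus named quality rules."""
    required_fields: Dict[str, type]
    rules: Dict[str, Rule] = field(default_factory=dict)

    def violations(self, record: Dict[str, Any]) -> List[str]:
        """Return a list of problems; an empty list means the record is valid."""
        problems: List[str] = []
        # Structural checks first: every required field present with the right type.
        for name, ftype in self.required_fields.items():
            if name not in record:
                problems.append(f"missing field: {name}")
            elif not isinstance(record[name], ftype):
                problems.append(f"wrong type: {name}")
        # Semantic rules run only on structurally valid records.
        if not problems:
            for rule_name, rule in self.rules.items():
                if not rule(record):
                    problems.append(f"rule failed: {rule_name}")
        return problems


# Hypothetical contract for an "orders" stream.
order_contract = DataContract(
    required_fields={"order_id": str, "amount": float},
    rules={"amount_positive": lambda r: r["amount"] > 0},
)
```

A producer wrapper could call `order_contract.violations(record)` and refuse to send any record for which the list is non-empty, which is the "enforce at the source" behavior described above.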

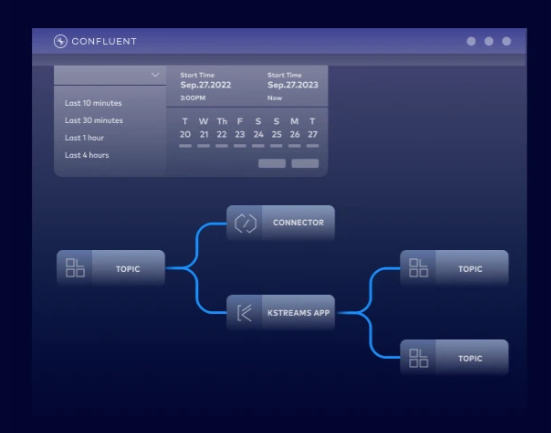

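Monitor data flows and resolve issues in minutes

Any number of data producers can write events to a shared log, and any number of consumers can read those events independently and in parallel. __You can add, evolve, recover, and scale producers or consumers without dependencies.__ Confluent integrates legacy and modern systems.

Accelerate time to market with self-service access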

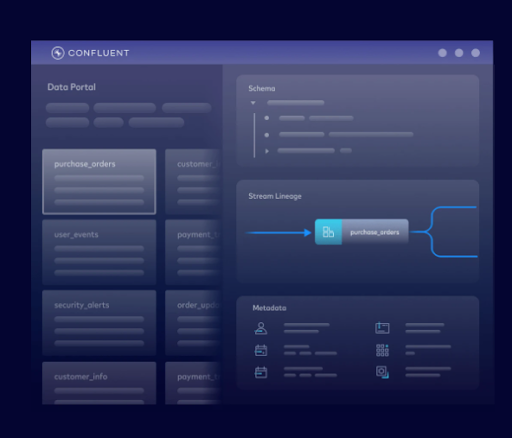

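Stream Catalog organizes Topics as data products that operational, analytical, and AI systems can access.

Metadata Tagging

Enrich Topics with business information about teams, services, use cases, systems, and more

Data Portal

Enable end users to search, query, discover, request access to, and view each data product through a UI.

Data Enrichment

Consume and enrich data streams, run queries, and create streaming data pipelines directly in the UI

Programmatic Access

Search, create, and tag Topics through a REST API based on Apache Atlas and a GraphQL API

Future-proof your data across any environment or architecture

__Adapt to changing business requirements without breaking downstream workloads__ for applications, analytics, and AI.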

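Safe Schema Evolution

Add new fields, change data structures, and update formats while maintaining compatibility with existing dashboards, reports, and ML models

Automated Compatibility Checks

Validate schema changes before deployment to prevent disruptions to downstream analytics applications and AI pipelines

Flexible Migration Paths

Choose backward, forward, or full compatibility mode based on your specific upgrade requirements and organizational constraints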
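
The difference between the backward, forward, and full compatibility modes can be sketched in a few lines of Python. This is a deliberately simplified model, not Schema Registry's actual checker: a schema is represented as a dict mapping each field name to a `(type_name, has_default)` tuple, and the `can_read` and `is_compatible` helpers are hypothetical names. Real Avro, Protobuf, and JSON Schema rules cover more cases, such as type promotion and nested records.

```python
from typing import Dict, Tuple

# Simplified schema model (an assumption for this sketch):
# field name -> (type name, whether the field has a default value)
Schema = Dict[str, Tuple[str, bool]]


def can_read(reader: Schema, writer: Schema) -> bool:
    """True if data written with `writer` can be decoded using `reader`."""
    for name, (ftype, has_default) in reader.items():
        if name in writer:
            # A shared field must keep the same type in this simplified model.
            if writer[name][0] != ftype:
                return False
        elif not has_default:
            # The reader expects a field the writer never wrote, with no fallback.
            return False
    return True


def is_compatible(new: Schema, old: Schema, mode: str) -> bool:
    """Check a proposed schema against the previous version."""
    if mode == "BACKWARD":   # consumers on the new schema read old data
        return can_read(reader=new, writer=old)
    if mode == "FORWARD":    # consumers on the old schema read new data
        return can_read(reader=old, writer=new)
    if mode == "FULL":       # both directions must hold
        return can_read(new, old) and can_read(old, new)
    raise ValueError(f"unknown mode: {mode}")


v1: Schema = {"id": ("string", False)}
v2_optional: Schema = {"id": ("string", False), "email": ("string", True)}
v2_required: Schema = {"id": ("string", False), "email": ("string", False)}
```

In this model, adding `email` with a default (`v2_optional`) passes all three modes, while adding it without a default (`v2_required`) is forward compatible only, because a consumer on the new schema has no value to fill in when reading old records.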

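ACERTUS uses Schema Registry to make it easy to edit and add order contracts without changing the underlying code. Changes to schemas and Topics data are recorded in real time, so when data users find the data they are looking for, they can trust that it is accurate and reliable.

With Stream Governance, Confluent ensures data quality and security, enabling Vimeo to safely scale and share data products across its business.

“It's amazing how much you can accomplish when you don't have to worry about exactly how the processing gets done. We can trust Confluent because it gives us a secure, robust Kafka platform with many value-added capabilities such as security, Connectors, and Stream Governance.”

New developers get $400 in credits during their first 30 days, with no need to talk to a sales representative.

Confluent gives you everything you need.

- Build: Develop with client libraries for Java, Python, and more, plus sample code, 120+ pre-built connectors, and a Visual Studio Code extension.

- Learn: Learn from on-demand courses, certifications, and a global community of experts.

- Operate: Use the CLI, IaC support for Terraform and Pulumi, and observability with OpenTelemetry.

Sign up with one of the cloud marketplace accounts below, or sign up directly with us.

Confluent Cloud

A fully managed, cloud-native Apache Kafka® service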

Stream Governance With Confluent | FAQs

What is Stream Governance?

Stream Governance is a suite of fully managed tools that help you ensure data quality, discover data, and securely share data streams. It includes components like Schema Registry for data contracts, Stream Catalog for data discovery, and Stream Lineage for visualizing data flows.

Why is Stream Governance important for Apache Kafka?

As Kafka usage scales, managing thousands of topics and ensuring data quality becomes a major challenge. Governance provides the necessary guardrails to prevent data chaos, ensuring that the data flowing through Kafka is trustworthy, discoverable, and secure. This makes it possible to democratize data access safely.

What components are included in Confluent’s Stream Governance?

Stream Governance combines data quality, catalog, and lineage capabilities: Schema Registry for data contracts, Stream Catalog for data discovery, and Stream Lineage for visualizing data flows.

How is Confluent’s approach different from open source Kafka governance?

While open source provides basic components like Schema Registry, Confluent offers a complete, fully managed, and integrated suite. Stream Governance combines data quality, catalog, and lineage into a single solution that is deeply integrated with the Confluent Cloud platform, including advanced features like a Data Portal, graphical lineage, and enterprise-grade SLAs.

Does Stream Governance support industry standards like Avro and Protobuf?

Yes. Confluent Stream Governance provides robust support for industry-standard data serialization formats through its integrated Schema Registry, giving you the flexibility to use the data formats that best suit your needs.

Schema Registry centrally stores and manages schemas for your Kafka topics, supporting the following formats: Apache Avro, Protobuf, JSON Schema.

This ensures that all data produced to a Kafka topic adheres to a predefined structure. When a producer sends a message, it serializes the data according to the registered schema, which typically converts it into a compact binary format and embeds a unique schema ID. When a consumer reads the message, it uses that ID to retrieve the correct schema from the registry and accurately deserialize the data back into a structured format. This process not only enforces data quality and consistency but also enables safe schema evolution over time.
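
The schema ID embedding described above can be sketched in Python. Confluent serializers prefix each serialized message with a magic byte (`0`) followed by the schema ID as a 4-byte big-endian integer, then the encoded payload. The `frame` and `unframe` helpers below are hypothetical names for illustration; the sketch treats the payload as opaque bytes and omits the extra message-index data that the Protobuf serializer adds after the schema ID.

```python
import struct
from typing import Tuple

MAGIC_BYTE = 0   # marks a message framed in the Schema Registry wire format
HEADER_SIZE = 5  # 1 magic byte + 4-byte schema ID


def frame(schema_id: int, payload: bytes) -> bytes:
    """Prefix an already-serialized payload with the magic byte and schema ID."""
    return struct.pack(">bI", MAGIC_BYTE, schema_id) + payload


def unframe(message: bytes) -> Tuple[int, bytes]:
    """Split a framed message into (schema_id, payload)."""
    if len(message) < HEADER_SIZE:
        raise ValueError("message too short to contain a schema ID header")
    magic, schema_id = struct.unpack(">bI", message[:HEADER_SIZE])
    if magic != MAGIC_BYTE:
        raise ValueError(f"unexpected magic byte: {magic}")
    return schema_id, message[HEADER_SIZE:]
```

A consumer would use the returned `schema_id` to fetch the matching schema from the registry (typically caching it) before decoding the payload.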

Can I use Stream Governance on-premises?

The complete Stream Governance suite, including the Data Portal and interactive Stream Lineage, is exclusive to Confluent Cloud. However, core components like Schema Registry are available as part of the self-managed Confluent Platform.

Is Stream Governance suitable for regulated industries?

Yes. Stream Governance is suitable for regulated industries and provides the tools you need to ensure data security and compliance with industry and regional regulations. Stream Lineage helps with audits, while schema enforcement and data quality rules help ensure data integrity. Across the fully managed data streaming platform, Confluent Cloud also holds numerous industry certifications like PCI, HIPAA, and SOC 2.

How can I get started with Stream Governance?

You can get started with Stream Governance by signing up for a free trial of Confluent Cloud. New users receive $400 in cloud credit to apply to any of the data streaming, integration, governance, and stream processing capabilities on the data streaming platform, allowing you to try Stream Governance features firsthand.

We also recommend exploring key Stream Governance concepts in the Confluent documentation, as well as following the Confluent Cloud Quick Start, which takes you through how to deploy your first cluster, produce and consume messages, and inspect them with Stream Lineage.